Building an all flash datastore - hardware

The ZFS filesystem has been around since 2005, and many consider it one of Sun's great contributions, alongside more obvious ones like Java. My personal history with ZFS doesn’t go all the way back to 2005, but rather to around 2013, when I started with software appliances built around ZFS, e.g. NexentaStor (illumos-based) and FreeNAS (on top of FreeBSD). These days I still use it for personal data and as storage for the homelab. There may be other interesting storage systems out there right now, like the distributed Ceph or vSAN, but I run a single node NAS, and I like to keep it that way. Moreover, I’m used to the insane stability and many features of ZFS.

Recently I wanted to improve the performance of my storage by going all-flash, based on NVMe. ZFS has excellent caching features such as L2ARC and ZIL/SLOG, but nothing beats making the primary storage as fast as possible. I faced 3 challenges here:

- I need more than 1TB of mirrored storage. Since 1TB consumer SSDs are the most price efficient right now, this means 4 NVMe devices, and I ended up buying four 1TB Samsung 970 Evo SSDs. The challenge is how to connect those to a main board without occupying 4 PCIe slots with PCIe to M.2 adapters (U.2 would be nice as well, but consumer devices are all M.2). And before you say I should use enterprise SSDs: I know, but this is for a homelab, not for a production deployment.

- There is a bug in FreeBSD 11 with lost interrupts on (Samsung) NVMe devices, resulting in long pauses or even hangs. It’s insanely frustrating and supposedly fixed in FreeBSD 12. However, FreeNAS is still based on 11.x. Since ZFS is pivoting away from FreeBSD to Linux, I decided not to wait and to move to ZFSonLinux on Ubuntu instead. The main challenge here is moving the pool and setting up iSCSI for ESXi consumption.

- Make the new setup perform close to native speed. Some tuning is required.

In this post I’ll talk about the first of the challenges above - adding 4 NVMe SSDs to a host. The others are the subject of a follow-up.

Samsung NVMe M.2 SSD. This thing is tiny


Moving to all flash NVMe

The host for my NAS is a dual socket Supermicro Xeon server board from early 2017. It doesn’t have an M.2 connector on board. When I needed only a single M.2 SSD in the past, I used a simple adapter from PCIe to M.2:

These things are around €20, which is still a lot if you consider there is no logic on the board at all, as its only function is to transport the x4 PCIe slot pins to pins in the M.2 connector - which uses 4 PCIe lanes.

This approach doesn’t scale to 4 devices, as this fills up PCIe slots very rapidly. So I started to search for adapter cards that could handle 4 M.2 devices, which is the limit as 4 M.2 devices taking 4 PCIe lanes each add up to 16 lanes - the maximum for a PCIe slot. The search confused me as some adapters were around €50, and some others around €400. What’s the big difference between these cards, and which one should I get?
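The lane arithmetic above, and what it means for bandwidth, can be sketched in a few lines of shell. This is only a back-of-the-envelope calculation and it assumes PCIe 3.0 (8 GT/s per lane, 128b/130b encoding), which is what the 970 Evo uses. The function name is made up for illustration, and protocol overhead is ignored, so real-world throughput will be lower.

```shell
# Theoretical PCIe 3.0 bandwidth in MB/s for a given lane count (integer math).
# Assumption: PCIe 3.0, 8 GT/s per lane with 128b/130b encoding.
# Packet/protocol overhead is NOT accounted for.
pcie3_bandwidth_mb() {
    lanes=$1
    # 8000 Mbit/s raw per lane * 128/130 encoding efficiency / 8 bits per byte
    echo $(( lanes * 8000 * 128 / 130 / 8 ))
}

devices=4           # M.2 SSDs on one adapter card
lanes_per_device=4  # each M.2 NVMe SSD uses an x4 link
total_lanes=$(( devices * lanes_per_device ))

echo "lanes needed: $total_lanes"
echo "per SSD (x$lanes_per_device): $(pcie3_bandwidth_mb "$lanes_per_device") MB/s"
echo "whole slot (x$total_lanes): $(pcie3_bandwidth_mb "$total_lanes") MB/s"
```

So a fully populated card needs exactly the 16 lanes one slot can offer, with a bit under 4 GB/s of theoretical bandwidth per SSD.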

Bifurcation

In terms of bandwidth it makes sense that 4 M.2 devices with 4 PCIe lanes each add up to one x16 PCIe slot. However, somehow the x16 port needs to be logically split into 4 independent x4 ports. This splitting, or bifurcation, can be done in the CPU. The crucial element here is that the BIOS needs to talk to the CPU to set this up at boot time.

If you don’t have a BIOS that enables bifurcation, you can still split the port on the adapter itself. Going this route requires intelligence on the adapter in the form of a PCIe switch (often called a PLX, after the main manufacturer), which immediately explains the different prices: high for adapters with a PCIe switch chip, low for simple adapters that only route the PCIe slot pins to 4 M.2 connectors.

In my setup with a Supermicro X10-DRi main board I couldn’t find bifurcation settings, but after some research I found Supermicro silently enabled bifurcation for non-Xeon D X10 boards in newer 3.x BIOS versions. A BIOS update was enough to enable this functionality.

I ordered an Asus Hyper M.2 x16 v2, loaded it up with 4 SSDs, installed it, and powered it on.

SSDs installed on an Asus Hyper M.2 x16 v2 (without heatsink cover)


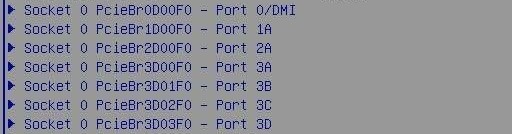

One last puzzle: the BIOS mentions bifurcation settings of PCIe ports, not slots:

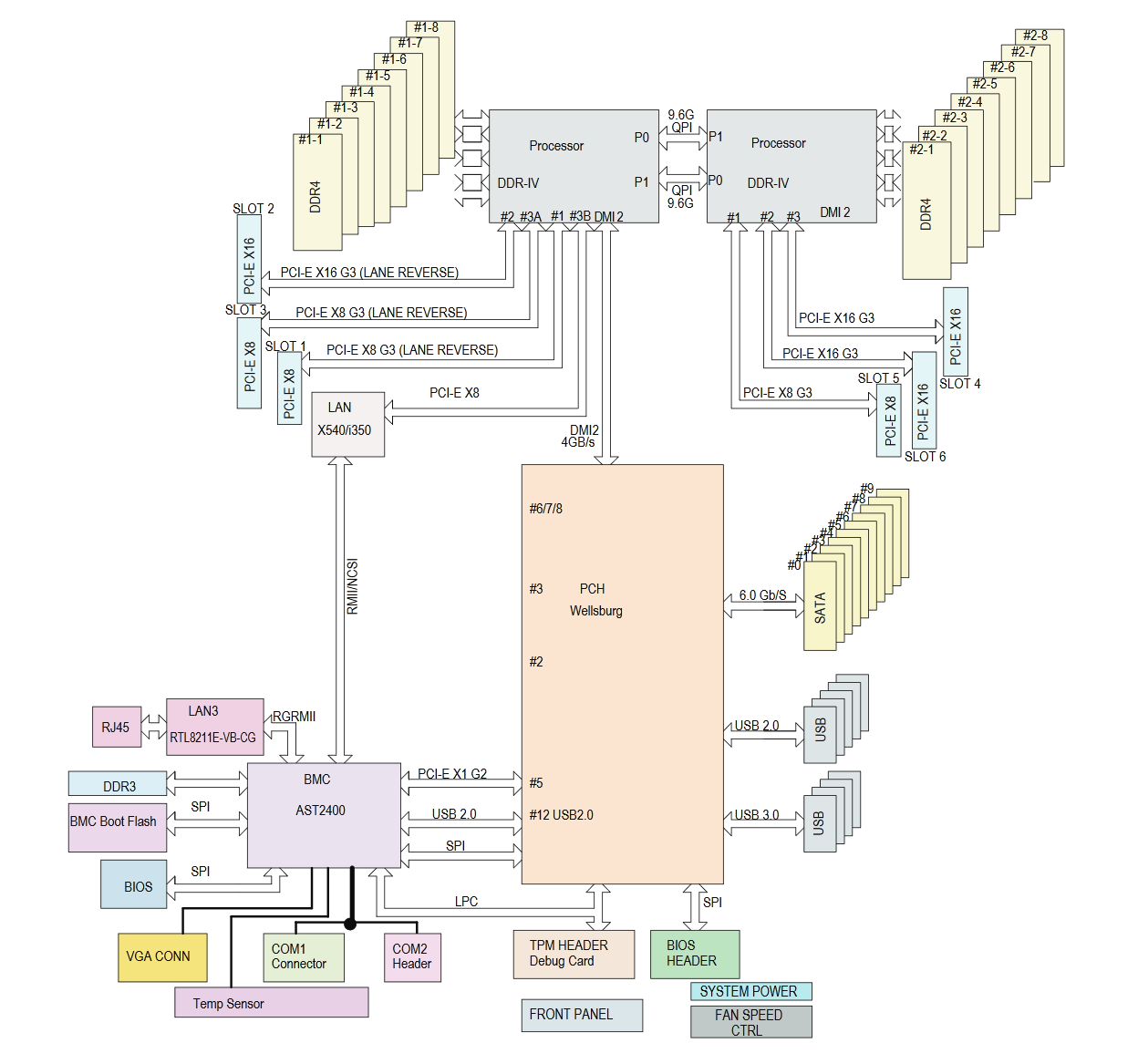

So how do I know which port to set to x4x4x4x4 instead of x16? It all makes sense if you consider the PCIe slot is a physical main board concept, whereas a PCIe port is a CPU concept. So we have to find the mapping between ports and slots. We need the main board manual:

Mainboard block diagram. Note the CPU PCIe ports indicated with a # are connected to PCIe slots with independent numbering


In my case, I installed the adapter in slot 2, which means I had to set up bifurcation for port 2 on CPU 0. Here both happen to be numbered 2, but that is just a coincidence. To illustrate this point: port 3 of CPU 0 is already bifurcated out of the box in x8x8: #3B provides 8 lanes to the onboard network chip, and another 8 lanes from #3A go to slot 3.

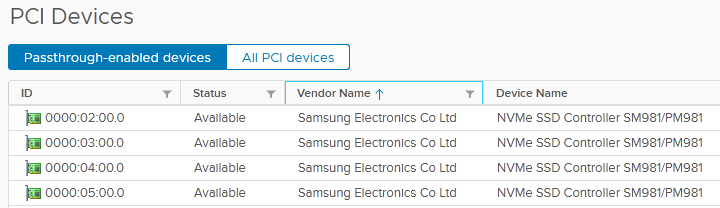
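Once a Linux system can see the card, a quick sanity check is whether all four SSDs show up and each negotiated an x4 link. Below is a minimal sketch that reads the PCIe link width from sysfs. The function name and the optional directory argument are my own additions (the argument is only there to make the function testable), and I'm assuming the values are reported the same way inside a VM with passthrough, which may not hold for every hypervisor.

```shell
# Print "<controller> x<current width> (max x<max width>)" for every NVMe
# controller found under the given sysfs class directory.
# The optional argument only exists so the function can be pointed at a
# test directory; on a real system, call it without arguments.
nvme_link_widths() {
    root=${1:-/sys/class/nvme}
    for ctrl in "$root"/nvme*; do
        # Skip if the glob matched nothing or no PCIe link info is exposed
        [ -r "$ctrl/device/current_link_width" ] || continue
        cur=$(cat "$ctrl/device/current_link_width")
        max=$(cat "$ctrl/device/max_link_width")
        echo "$(basename "$ctrl") x$cur (max x$max)"
    done
    return 0
}
```

Calling `nvme_link_widths` without arguments should list one line per SSD. Fewer than four lines, or a current width below 4, would suggest bifurcation is not set up on the right port.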

As I run my storage as a virtual machine on ESXi, the last thing to do was to boot ESXi and pass the 4 x4 devices through to a VM:

Speed

To get a baseline for the performance, I did some measurements on the raw device speed. A quick way to do this is with fio:

$ fio --ioengine=libaio --direct=1 --name=test --filename=/dev/nvme0n1p2 --iodepth=32 --size=12G --numjobs=1 --group_reporting --bs=4k --readwrite=randread

| Mode | Blocksize | IOPS | Bandwidth (MB/s) |
|---|---|---|---|
| random read | 4k | 184k | 752 |
| random write | 4k | 165k | 675 |
| random read | 64k | 23.9k | 1566 |
| random write | 64k | 25.8k | 1694 |
| random read | 1M | 1544 | 1620 |
| random write | 1M | 1616 | 1695 |
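The six rows above correspond to the same fio invocation with only the block size and the read/write mode changed. A small helper can generate those command lines; this is a sketch, not the exact script I used, and the function name and job naming are made up. It deliberately only prints the commands: the `randwrite` runs overwrite whatever is on the target device, so only run them against a disk or partition you can afford to lose.

```shell
# Print (not run) one fio command per mode / block size combination.
# $1 is the target device or file. WARNING: the randwrite variants
# destroy the data on the target when actually executed.
fio_sweep_commands() {
    dev=$1
    for bs in 4k 64k 1M; do
        for mode in randread randwrite; do
            echo "fio --ioengine=libaio --direct=1 --name=$mode-$bs" \
                 "--filename=$dev --iodepth=32 --size=12G --numjobs=1" \
                 "--group_reporting --bs=$bs --readwrite=$mode"
        done
    done
}

fio_sweep_commands /dev/nvme0n1p2
```

After reviewing the printed commands, they can be run one at a time or piped into `sh`.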

It’s clear the numbers aren’t as advertised, as these drives should be able to push 500k random read IOPS at 4k blocksize at queue depth 32. I’m not sure where the difference comes from, but the numbers are good enough to continue.
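To put these figures in perspective, bandwidth is simply IOPS multiplied by block size. The sketch below assumes fio's block size suffixes are binary (4k = 4096 bytes) and that the bandwidth column is in decimal MB/s; the function name is mine. The results should land within a MB/s or two of the measured values, since the IOPS numbers are rounded.

```shell
# Bandwidth in MB/s (10^6 bytes per second) from IOPS and block size in bytes.
# Assumption: block sizes are binary, i.e. 4k = 4096, 64k = 65536, 1M = 1048576.
bandwidth_mb() {
    echo $(( $1 * $2 / 1000000 ))
}

bandwidth_mb 184000 4096     # measured 4k random read
bandwidth_mb 23900 65536     # measured 64k random read
bandwidth_mb 1544 1048576    # measured 1M random read
bandwidth_mb 500000 4096     # advertised 4k random read
```

Even the advertised 500k IOPS at 4k works out to only about 2 GB/s, well within what a PCIe 3.0 x4 link can carry, so raw link bandwidth is unlikely to explain the gap in the 4k results.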

In the next post I’ll describe how to move from FreeNAS/TrueNAS to ZFS on Linux, and will check the performance before we connect to ESXi.