Building a passively cooled NAS

Ever since I retired my old NAS, I’ve been running my home automation (unifi controller, pi-hole, dns, domain controller) and personal storage (NAS) on the same host as my testing setup (‘lab’). The whole setup idles at ~95 Watts, which wouldn’t be too bad if I actively used the lab all the time, but more recently it hasn’t functioned as much more than an expensive 24/7 electrical heater.

So it was time to set up a new dedicated NAS to separate the functions again.

Requirements

The main requirements are silence and low idle power. All requirements in order of importance (high to low):

- Sound: fanless cooling

- Power: low (~35W) idle power (not for cost but to help with the above)

- Drive ports: 4+ SATA3, 2+ NVMe/PCIe x4

- PCIe: x16 expansion slot (for 10G+ networking)

- RAM: ECC, min 64G support - bonus: RDIMM support

- CPU: virtualization extensions including IOMMU

- Price: <€500 in parts (excluding drives)

- Remote management: nice to have - bonus: out of band (IPMI/iLO)

- PCIe: CPU assisted ‘bifurcation’ (splittable lanes, e.g.: x16 vs. x4x4x4x4)

Commercial product or DIY?

The closest I got to the requirements when searching for commercial offerings was the Synology 1621+, based on a Ryzen Embedded CPU. Synology products look good, are well built, and just work. However, the 1621+ has a fan, no ECC support, no 10GbE networking, uses SO-DIMMs, and starts at €900.

I wasn’t going to get that silent, low power, cheap and performant NAS this way, so I had to resort to building one myself.

Platform options

The quest for a good platform (mainboard+CPU) turned out to be quite a challenge. As I’m charmed by AMD’s Zen developments, I started my search there. The platforms I considered, and why I did NOT pick them:

- Ryzen Embedded (V2516) - the only form factor commercially available is ‘NUC like’: boards lack extensibility (not even 4 SATA/NVMe, let alone PCIe).

- Epyc (7232P) - awesome platform but misses the target with an idle power expected to be around 100W

- Ryzen APU (4300GE) - only available for OEMs, and only 8 available PCIe lanes as AMD iGPU takes 8 lanes

- Ryzen (Ryzen 5 3600) - no PCIe bifurcation on lower end chipsets, and the X570 chipsets are powerhogs on mostly expensive boards

- Xeon Scalable 2nd gen (Silver 4208) - too overpowered and pricey, like Epyc Rome

- Xeon E5 v4 (E5-2620v4) - these CPUs are still great and can be had refurbished for bargain prices. The challenge was finding a single socket 2011-3 motherboard without paying 2017 prices. Too challenging, it turns out… Also, I wasn’t sure I was really going to hit that ~35W idle requirement with a server chipset.

- Xeon D1600/2100 - 2016 CPUs for 2021 prices… I don’t think so.

- Atom C3000 (C3558R) - as with Ryzen Embedded, the boards are really lacking extensibility options. Also the CPU is pretty weak.

- ARM based platforms - there are hardly any mid-range options in the ARM ecosystem (since pretty much forever). The only options are entry level (Raspberry Pi) and top end (Ampere eMAG, Graviton). While Apple’s M1 is a sight for sore eyes and may provoke others to come up with mid-range ARM options, there aren’t any now.

Before I present the final kit, I would like to make a special mention:

- Epyc Embedded (3101) - for around €500 the ASRock Rack EPYC3101D4I-2T ticks all the boxes. This is an amazing option with 2x10Gbe on-board (better than the Supermicro alternatives which waste PCIe lanes). What kept me from buying it was the lack of aftermarket heatsink support due to the proprietary SP4r2 socket, which would force me to have (case) fans after all. This is really a shame because it was my preferred option. The only thing that could make this better is a mATX form factor option for more extensibility and choice in NICs.

So by elimination, I settled for my final option, which consisted of:

- CPU: Intel i3-9100 (€110)

- Mainboard: Fujitsu/Kontron D3644-B (€130)

- RAM: 2x 16GB DDR4 ECC UDIMM (2x€70)

Why a 9th gen i3? The i3 range traditionally has ECC support (whereas i5, i7 and i9 don’t). The 9th generation was launched in Q2'19 and is, for now, the final generation to include ECC support. Intel decided to drop ECC support from the 10th gen on to create a better market for its much more expensive and feature rich Xeon E series.

The Fujitsu board is based on a workstation chipset (C246), has plenty of extensibility, and is known (praise the internet!) for its low idle power. The main drawback is the lack of out of band (IPMI/iLO) management, but fortunately the CPU does come with an integrated GPU for initial setup, which, as opposed to AMD iGPUs, doesn’t lower the available PCIe lanes. A related, smaller drawback is the lack of monitoring (thermal/voltage) support in VMware ESXi.

Other parts

I had an unused Fractal Design Define R5 case, Seasonic 660W PSU and Noctua NH-D14 heatsink sitting around, so I used those to see how far they would take me. Eventually, I plan to build this in a dedicated case. To squeeze out the last idle Watts, it’s also essential to have an efficient power supply matched to the load, but that’ll have to wait as well.

The total system bill of material:

- Intel i3-9100

- Fujitsu/Kontron D3644-B

- 2x Samsung M391A2K43BB1-CTD (16GB DDR4 ECC UDIMM)

- 2x Western Digital Red 4TB spindles (WD40EFRX)

- Intel 320 Series SSD (ZFS Cache)

- Samsung 970 Evo 1TB NVMe SSD (local storage)

- SanDisk 32GB USB (ESXi boot disk)

- Noctua NH-D14 (without fan)

- Mellanox/nVidia Connectx-3 40Gbe ethernet adapter

Building

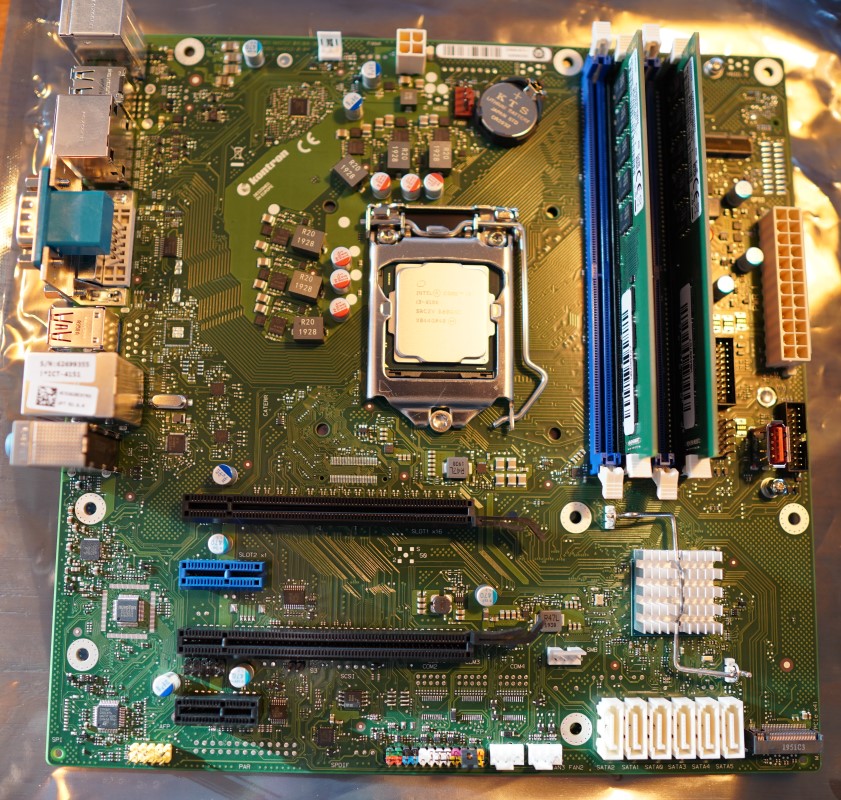

Motherboard with CPU and RAM installed:

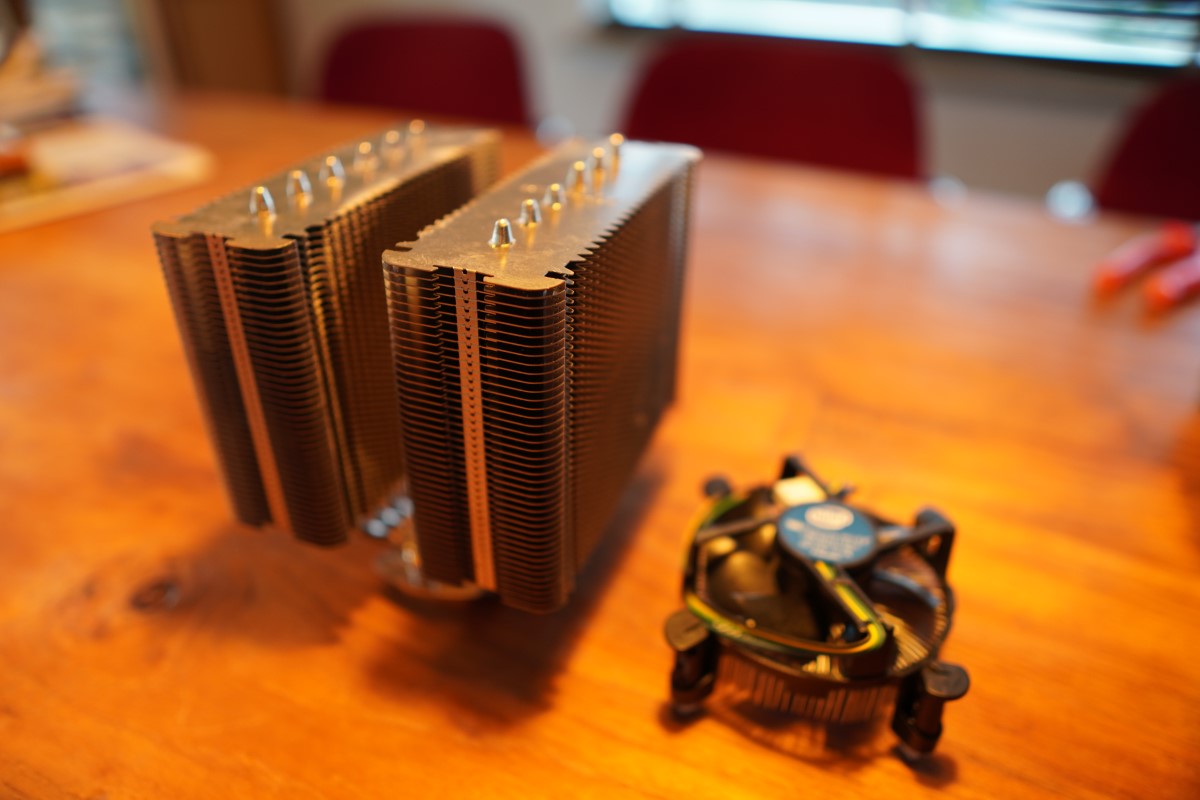

Stock i3 cooler vs. Noctua’s gargantuan NH-D14 cooler, which may even be enough to passively cool this CPU:

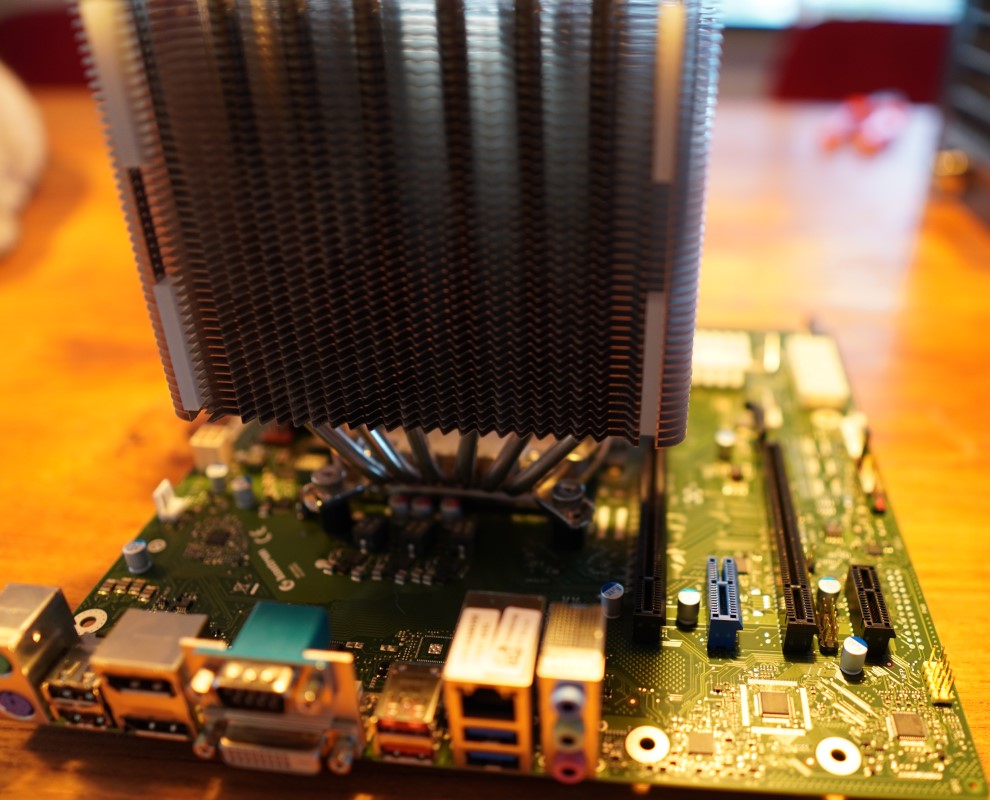

Also I have a problem if I ever need that PCIe x16 slot…

The completed build:

Testing passive cooling

The system is now passively cooled, but not doing much. To test whether the heatsink was up to the job of cooling the i3 passively, I ran a stress test loading all cores at 100% for a couple of hours. To help natural convection, I opened up a fan mount in the top of the case. The highest CPU temperature I recorded - after running at 100% for hours - was 68 Celsius (48 degrees above room temperature).
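As a rough sanity check on that result, the temperature figures can be turned into a headroom estimate. The sketch below is not part of the original test: it assumes a maximum junction temperature of 100 Celsius for the i3-9100 (verify this against Intel's spec sheet), and it assumes the rise over ambient stays about the same at full load, which is only an approximation for natural convection. The function names are made up for illustration.

```python
def ambient_c(cpu_peak_c: float, rise_c: float) -> float:
    """Room temperature implied by the peak CPU temperature and its rise over ambient."""
    return cpu_peak_c - rise_c


def headroom_c(tj_max_c: float, cpu_peak_c: float) -> float:
    """Margin between the measured peak and the assumed throttle point."""
    return tj_max_c - cpu_peak_c


def max_ambient_c(tj_max_c: float, rise_c: float) -> float:
    """Highest room temperature before hitting tj_max, assuming a constant rise."""
    return tj_max_c - rise_c


CPU_PEAK_C = 68   # measured peak from the stress test
RISE_C = 48       # measured rise over room temperature
TJ_MAX_C = 100    # ASSUMPTION: check the CPU's spec sheet

print("room temperature:", ambient_c(CPU_PEAK_C, RISE_C), "C")
print("headroom at full load:", headroom_c(TJ_MAX_C, CPU_PEAK_C), "C")
print("max room temperature:", max_ambient_c(TJ_MAX_C, RISE_C), "C")
```

Under those assumptions the room was at 20 Celsius and the CPU had roughly 32 degrees to spare, so a hot summer day should not push a fully loaded system into throttling.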

How many watts?

I installed VMware ESXi 7, and run 6 VMs: Unifi controller, domain controller (Windows Server), VMware vCenter Server 7, nameserver/ad-blocker (bind/pi-hole), and a Linux jumphost. The 6th VM provides the data services, and has the motherboard AHCI controller (SATA) passed through to access the SATA disks directly.

The numbers (as measured with an inline APC UPS):

- 100% load: ~60 Watts

- OS idle: ~31 Watts

- OS idle (hd-idle): ~24 Watts (no spinning WD Reds)
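To put these numbers next to the ~95 Watts the old combined host idled at, here is a small back-of-the-envelope calculation of yearly energy use. It assumes each system sits at its idle draw 24/7, which is a simplification, and the labels and function name are only illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def annual_kwh(watts: float) -> float:
    """Energy in kWh used over one year at a constant draw in watts."""
    return watts * HOURS_PER_YEAR / 1000


# Wall-power figures quoted in this article.
DRAWS_W = {
    "old combined host, idle": 95,
    "new NAS, OS idle": 31,
    "new NAS, disks spun down": 24,
}

baseline = annual_kwh(DRAWS_W["old combined host, idle"])
for label, watts in DRAWS_W.items():
    kwh = annual_kwh(watts)
    saved = baseline - kwh
    print(f"{label:26s} {watts:3d} W  {kwh:6.1f} kWh/yr  saves {saved:6.1f} kWh/yr")
```

With the figures above this works out to roughly 832 kWh per year for the old host, versus about 272 kWh at OS idle and about 210 kWh with the disks spun down.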

Next steps

I’m quite pleased with the result so far: complete silence and low power draw. I’m currently looking into a more NAS like case, a more efficient power supply, and replacing the spindles with SSDs when prices drop some more.